WordPress Gallery

Ugh, so the WordPress built-in gallery content type seems broken again. I'm not sure it's worth bothering about. If I fix it locally, it'll just break again on some future update.

Every time this happens, I get confused and lost, and have to rediscover the solution. So here’s a note to future self.

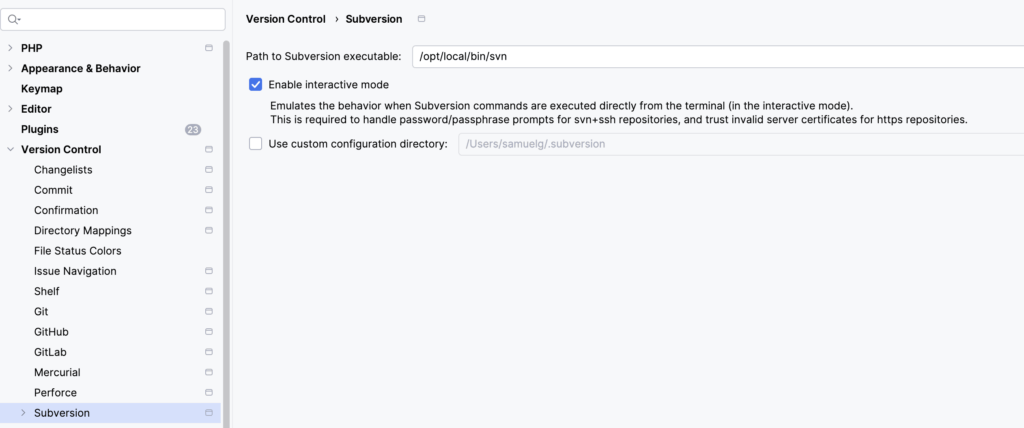

Symptom: PHPStorm stalls on an SVN update and sits there doing nothing. The network is OK. SSH into the server that hosts SVN, watch the WebDAV logs, and there's nothing even trying to talk to it.

PHPStorm is configured to use an external Subversion client.

Solution: Don't (necessarily) go and mess around with PHPStorm's settings. Open a terminal, go to the working copy in question, and run "svn up" from the command line. This is where you'll discover that SVN has flagged the server certificate as either expired or changed; if it has changed, SVN will ask you to accept the new certificate. Log in with your credentials again, and everything will be OK. (Presumably PHPStorm stalls because the external client is sitting at that prompt, which PHPStorm never shows you.)
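If I ever want to script this check instead of remembering it, a minimal sketch in Python might look like the following. It runs `svn update --non-interactive`, so a certificate question makes svn fail instead of waiting forever for an answer. The function names are my own, and matching on error code `E230001` assumes a reasonably recent svn client, which (as far as I know) reports certificate verification failures as "E230001: Server SSL certificate verification failed: ...".

```python
import subprocess

# Assumption: recent svn clients report TLS certificate verification
# failures with this error code.
CERT_ERROR_CODE = "E230001"


def looks_like_cert_problem(stderr: str) -> bool:
    """Return True if svn's error output points at a server certificate problem."""
    return CERT_ERROR_CODE in stderr


def svn_update(working_copy: str, timeout: float = 60.0) -> tuple[bool, str]:
    """Run 'svn update' without prompting, so a certificate question fails fast
    instead of hanging while svn waits for input that never comes."""
    try:
        result = subprocess.run(
            ["svn", "update", "--non-interactive"],
            cwd=working_copy,
            capture_output=True,
            text=True,
            timeout=timeout,
        )
    except FileNotFoundError:
        return False, "svn not found on PATH"
    except subprocess.TimeoutExpired:
        return False, "svn update timed out"
    if result.returncode == 0:
        return True, result.stdout
    if looks_like_cert_problem(result.stderr):
        # Accepting a changed certificate still has to happen interactively.
        return False, "certificate problem; run 'svn up' in a terminal\n" + result.stderr
    return False, result.stderr
```

Usage would be something like `ok, message = svn_update("/path/to/working/copy")`, where the path is a placeholder. The script only diagnoses; deciding whether to trust a changed certificate is still a job for a human at the terminal.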

The Department of Water and Power is doing work near the office, and over the weekend, there was a sustained power outage. I came in Monday to shrieking UPSes and had to power up the firewall and a few other machines. It was the normal stupid kind of stuff.

We have a few virtual servers out in “the cloud,” and we use point-to-point VPNs to make them seem local to our network. Those VPNs also needed restarting.

Over the course of the day, however, one VPN connection kept disconnecting unceremoniously. Looking at logs on the various servers was unenlightening. Everything was running normally, other than the surprise disconnects.

In the evenings, I've been watching the old Granada TV/Jeremy Brett Sherlock Holmes series, so I had to apply Holmes' deductive process. The virtual servers had experienced no changes except being disconnected, so I needed to focus on the firewall. The firewall had experienced no change, except being restarted. What could have happened?

I finally found an incorrect configuration setting: a netmask that was too broad, which allowed devices not on the VPN to collide with VPN IP addresses. I fixed the netmask, and the VPN has been up and stable ever since.
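To make the netmask problem concrete, here's a small sketch using Python's standard `ipaddress` module. The addresses are made up, since the actual ranges aren't given here: a VPN using 10.8.0.0/24 and an office LAN that should be 10.0.0.0/24. If the LAN's mask is mistakenly /8, every VPN address also looks like a local LAN address, which is the sort of collision that produces flaky connections.

```python
import ipaddress

# Hypothetical ranges, for illustration only.
VPN_SUBNET = ipaddress.ip_network("10.8.0.0/24")     # addresses used on the VPN
LAN_CORRECT = ipaddress.ip_network("10.0.0.0/24")    # office LAN, tight netmask (255.255.255.0)
LAN_TOO_BROAD = ipaddress.ip_network("10.0.0.0/8")   # same LAN, netmask too broad (255.0.0.0)


def collides_with_vpn(lan: ipaddress.IPv4Network, vpn: ipaddress.IPv4Network) -> bool:
    """True if any address the LAN treats as local also belongs to the VPN."""
    return lan.overlaps(vpn)


for label, lan in [("correct", LAN_CORRECT), ("too broad", LAN_TOO_BROAD)]:
    print(f"{label}: {lan} overlaps {VPN_SUBNET}? {collides_with_vpn(lan, VPN_SUBNET)}")

# A device on the VPN, e.g. 10.8.0.5, looks "local" to the over-broad LAN.
print(ipaddress.ip_address("10.8.0.5") in LAN_TOO_BROAD)
```

With the /24 mask there's no overlap; with the /8 mask the whole VPN range falls inside what the firewall considers the local network.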

But how could this be? It had been running properly literally for years. It had to be something to do with the power outage. But if the outage had corrupted the configuration, it wouldn't have been a single IP netmask changing. "[W]hen you have eliminated the impossible, whatever remains, however improbable, must be the truth." The bad configuration could not have been in use before the outage.

The best theory is that the configuration file had been (accidentally?) modified at some point in the past, but never loaded. When the firewall was restarted, it loaded this modified configuration for the first time.
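If that theory is right, the saved configuration had drifted from the running one long before the outage. Assuming the firewall can dump its running configuration as text (how depends on the device, which isn't named here), a quick diff against the saved file before any planned restart would surface this kind of latent change. A minimal sketch with Python's `difflib`; the config lines use made-up syntax:

```python
import difflib


def config_drift(running: str, saved: str) -> list[str]:
    """Return unified-diff lines from the running config to the saved config.

    An empty list means a restart would load exactly what is running now."""
    return list(
        difflib.unified_diff(
            running.splitlines(),
            saved.splitlines(),
            fromfile="running",
            tofile="saved",
            lineterm="",
        )
    )


# Made-up config syntax, for illustration only.
running_config = "vpn local 10.8.0.1\nvpn netmask 255.255.255.0\n"
saved_config = "vpn local 10.8.0.1\nvpn netmask 255.0.0.0\n"

for line in config_drift(running_config, saved_config):
    print(line)
```

Here the diff shows the single changed netmask line, the kind of edit that sits harmlessly on disk until the next reboot loads it.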

The big archway rose went over in the wind. So we spent the afternoon pruning it back and trying to get the situation under control.

I use Time Machine for my local desktop backups. It’s a nice solution. It sits there quietly backing stuff up, keeping multiple revisions of files, and even keeping it all encrypted so if the external drive gets swiped it’s not going to be easy to get at the data.

Of course, it's no substitute for a revision control system for code, nor is it good for situations where the office gets annihilated by a stray meteorite or drone strike. It's not a complete solution, but it's part of a broader collection of solutions.

Today I was reminded of some of the limitations. I used Time Machine to migrate to a new machine. That’s a pretty sweet process. You wait for a few hours of disk read time, and suddenly a new machine is populated with all your old settings, applications, data, and so on from your old machine.

But I found some things that weren’t quite right. Most of them had to do with processes that keep open files or databases, and don’t get backed up in a clean fashion.
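Copying a database file while the owning process has it open can catch it in the middle of a write. The usual remedy is to ask the database itself for a consistent snapshot and back that up instead. Time Machine's internals aren't covered here; this is just a sketch using SQLite as an example, whose online backup API is exposed in Python (3.7+) as `sqlite3.Connection.backup`. The `snapshot` helper name is my own.

```python
import sqlite3


def snapshot(live: sqlite3.Connection, dest: sqlite3.Connection) -> None:
    """Copy the committed contents of an open SQLite database into `dest`.

    Uses SQLite's online backup API, so the copy is consistent even though
    the source connection stays open, unlike a byte-for-byte file copy that
    might catch the file mid-write."""
    live.backup(dest)


# Demo with in-memory databases; a real setup would point `dest` at a file
# that the backup tool then picks up.
live = sqlite3.connect(":memory:")
live.execute("CREATE TABLE notes (body TEXT)")
live.executemany("INSERT INTO notes VALUES (?)", [("first",), ("second",)])
live.commit()

copy = sqlite3.connect(":memory:")
snapshot(live, copy)
print(copy.execute("SELECT COUNT(*) FROM notes").fetchone()[0])  # → 2
```

The design point is that the database, not the file system, decides what a consistent state looks like; the backup tool then copies a file that nobody is writing to.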

That's all so far. Nothing too surprising, but a good reminder. Just because you're backing up doesn't necessarily mean you're backing up stuff in a restorable state!