Fifteen years ago, I worked on a blog that was hosted via Blogger.com (aka blogspot.com aka Google). We had a custom domain name for the blog and everything. It was pretty cool.

Now, many years later, the domain name is finally set to expire. We haven’t touched that blog in eleven years, but it still seems a shame for the content to just vanish. So I thought about making a static copy to host somewhere.

Google makes cloning one of these blogs difficult. They do, however, give you a backup/download capability. I went through re-activating the Google account that was tied to the blog, giving all sorts of identifying information and getting verification emails and texts. That done, I initiated the process to back up the blog and shortly thereafter received an email that my download was ready. By then, however, Google had become absolutely certain I’m not who I say I am (even with the verification emails and texts), and their security locked me out of the account. When I read up on the subject, I also learned that even if I could download it, their site backup is an XML bundle that only works for reimporting into their blog system anyway.

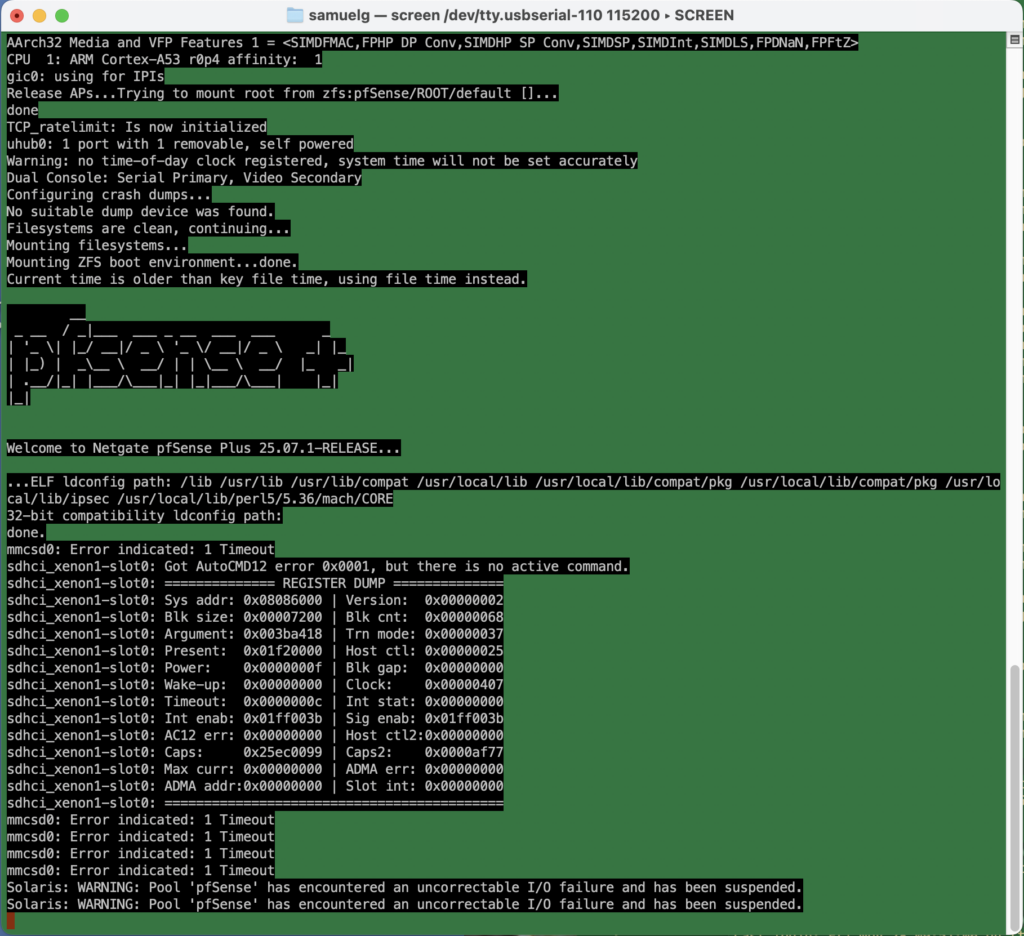

So I thought I’d use the good old standby wget to build a static copy. I tried:

wget --mirror -w 2 -p --html-extension --convert-links --restrict-file-names=windows http://www.myurl.com

Yes, this site was so old that we didn’t use SSL… Still, Google stores the assets off in a bunch of other subdomains, and I was unable to come up with the correct syntax to allow wget to follow those. I’d get the pages, but everything still linked to the Google servers for the assets. That wasn’t going to work.
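For reference, wget's documented mechanism for crossing hosts is `--span-hosts` combined with a `--domains` allow-list. What follows is only a sketch and has not been verified against a Blogger site: the function name `mirror_with_assets` is made up for illustration, and the two asset domains are assumptions about where the images and stylesheets live.

```shell
# Hypothetical helper: mirror one site and let wget cross over to an
# allow-list of asset hosts. The domain list is an assumption, not
# something confirmed for this blog.
mirror_with_assets() {
  url=$1
  # Host part of the URL, e.g. "www.myurl.com" from "http://www.myurl.com/".
  host=$(printf '%s\n' "$url" | sed -E 's#^[a-z]+://([^/]+).*#\1#')
  wget --mirror --wait=2 --page-requisites --adjust-extension \
       --convert-links --restrict-file-names=windows \
       --span-hosts \
       --domains="$host,bp.blogspot.com,googleusercontent.com" \
       "$url"
}

# Example call (this starts a real crawl):
# mirror_with_assets "http://www.myurl.com/"
```

`--domains` matches by suffix, so `bp.blogspot.com` should cover numbered image hosts under it without opening the door to every other blogspot site. `--adjust-extension` is the current name for `--html-extension`.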

So next I used the old, powerful F/OSS friend, httrack. My first attack was as follows:

httrack "http://www.myurl.com/" \
  -O "myurl-offline" \
  -%v \
  --robots=0 \
  "+www.myurl.com/*" \
  "+*.blogspot.com/*" \
  "+*.bp.blogspot.com/*" \
  "+*.googleusercontent.com/*" \
  "+*.jpg +*.jpeg +*.png +*.gif +*.webp" \
  "+*.css +*.js" \
  "+*.mp4 +*.webm" \
  "-*/search?updated-max=*"

This worked, but a little too well. This blog was part of a community of sites, many of which were hosted elsewhere on blogspot. The cloning was slow. Then I noticed it had used up 2 GB of disk space, whereupon I discovered that I was happily making static copies of twelve other blogs from that community, and possibly more to come! I interrupted the process and tried again, removing the blank check for blogspot sites:

httrack "http://www.myurl.com/" \
  -O "myurl-offline" \
  -%v \
  --robots=0 \
  "+www.myurl.com/*" \
  "+*.bp.blogspot.com/*" \
  "+*.googleusercontent.com/*" \
  "+*.jpg +*.jpeg +*.png +*.gif +*.webp" \
  "+*.css +*.js" \
  "+*.mp4 +*.webm" \
  "-*/search?updated-max=*"

This was successful!
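A quick way to confirm that a crawl stayed in bounds is to look at how much space each host takes up in the output folder. The sketch below assumes httrack's default layout, where each mirrored host gets its own top-level directory; the helper name `mirror_usage` is made up for illustration.

```shell
# Hypothetical helper: show disk usage (in KiB) of each top-level
# directory in an httrack output folder, largest first.
mirror_usage() {
  du -sk "$1"/*/ | sort -rn
}

# Example call:
# mirror_usage myurl-offline
```

If a directory for some unrelated blog shows up near the top of the list, the filters are probably still too generous.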

I now have a static version of the site. It’s not perfect; some references, such as the user profile links, still point at blogspot. But if I want to post the static site somewhere, I can do that, and it will be usable enough that people can still experience the postings and articles.
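To see which blogspot links are left over in a copy like this, something along these lines can tally them. It is a rough sketch: the helper name `list_blogspot_refs` is made up, and it only looks at absolute URLs inside `.html` files.

```shell
# Hypothetical helper: count the blogspot.com hosts (scheme + hostname)
# still referenced by HTML files under a directory, most frequent first.
list_blogspot_refs() {
  grep -rhoE --include='*.html' 'https?://[A-Za-z0-9.-]*blogspot\.com' "$1" \
    | sort | uniq -c | sort -rn
}

# Example call:
# list_blogspot_refs myurl-offline
```

Each output line is a count followed by a host, which makes it easy to decide whether the remaining links are worth cleaning up by hand.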