So, it was another plugin-gets-updated-and-the-site-crashes situation. It’s not exactly the plugin’s fault. It’s WordPress being stupid about security.

As I wrote back in 2019, I have WordPress automatically updating itself and its plugins using a cron job that uses the magical WordPress CLI (WP-CLI). Notably, this update process runs as a different user than the web server. This is by design. I want to minimize the number of directories where the web server has write permission; above all, I don’t want it to be able to write to the directories containing code. This is kind of basic stuff. If someone can abuse a bug in WordPress core or a plugin to write a file in the web tree, they could do all sorts of mischief without even escalating privileges. Denying the web server write access to those areas is a simple mitigation that prevents a whole class of attacks.

WordPress, however, was written with the belief that it should be able to write files wherever it damn well pleases. The idea is that a naïve user gives WordPress full write access on their server, or their FTP credentials to their host, or their SSH username and password [!!], and then a lot of functionality becomes simpler. Once the web server can write everywhere, it’s easy to let the user install, update, edit, and remove plugins and themes directly from the web interface. Very convenient! Especially if you don’t have trust issues like I do.

Now, because of the way content uploads and plugins work, there will always be some directories where WordPress needs write access. That’s fine. I can keep some of those from becoming a problem by configuring the web server not to execute code in them.

There’s a lot of infrastructure to support WordPress’ profligate write permissions. One component is an internal function, WP_Filesystem, that sets up a global abstraction of the filesystem. Once that function has been called, plugins, themes, or whatever else can call methods on the global $wp_filesystem variable to interact with the filesystem, while behind the scenes those operations might happen directly, over FTP, over SSH, or over other protocols, depending on how the system is set up. Instead of calling file_put_contents(...), for example, the plugin author calls $wp_filesystem->put_contents(...) and doesn’t have to worry about which protocol is used.
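To make the pattern concrete, here is a minimal Python sketch of that kind of abstraction (not WordPress’ PHP code): plugin code talks to a filesystem object through a fixed set of methods, and only the backend knows how the bytes actually reach the disk. FilesystemBase, DirectFilesystem, and save_stylesheet are hypothetical names; an FTP or SSH backend would implement the same two methods over a network connection.

```python
from abc import ABC, abstractmethod


class FilesystemBase(ABC):
    """Hypothetical stand-in for the object WordPress keeps in $wp_filesystem."""

    @abstractmethod
    def put_contents(self, path: str, contents: str) -> bool:
        """Write contents to path; return True on success."""

    @abstractmethod
    def get_contents(self, path: str) -> str:
        """Return the contents of path."""


class DirectFilesystem(FilesystemBase):
    """Backend that writes straight to local disk as the current process user."""

    def put_contents(self, path: str, contents: str) -> bool:
        try:
            with open(path, "w", encoding="utf-8") as fh:
                fh.write(contents)
            return True
        except OSError:
            return False  # report failure instead of raising

    def get_contents(self, path: str) -> str:
        with open(path, encoding="utf-8") as fh:
            return fh.read()


def save_stylesheet(fs: FilesystemBase, path: str, css: str) -> bool:
    """Hypothetical plugin code: it only ever sees the abstract interface."""
    return fs.put_contents(path, css)
```

The plugin never learns which backend it got; that choice is made once, elsewhere, which is exactly where things can go wrong.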

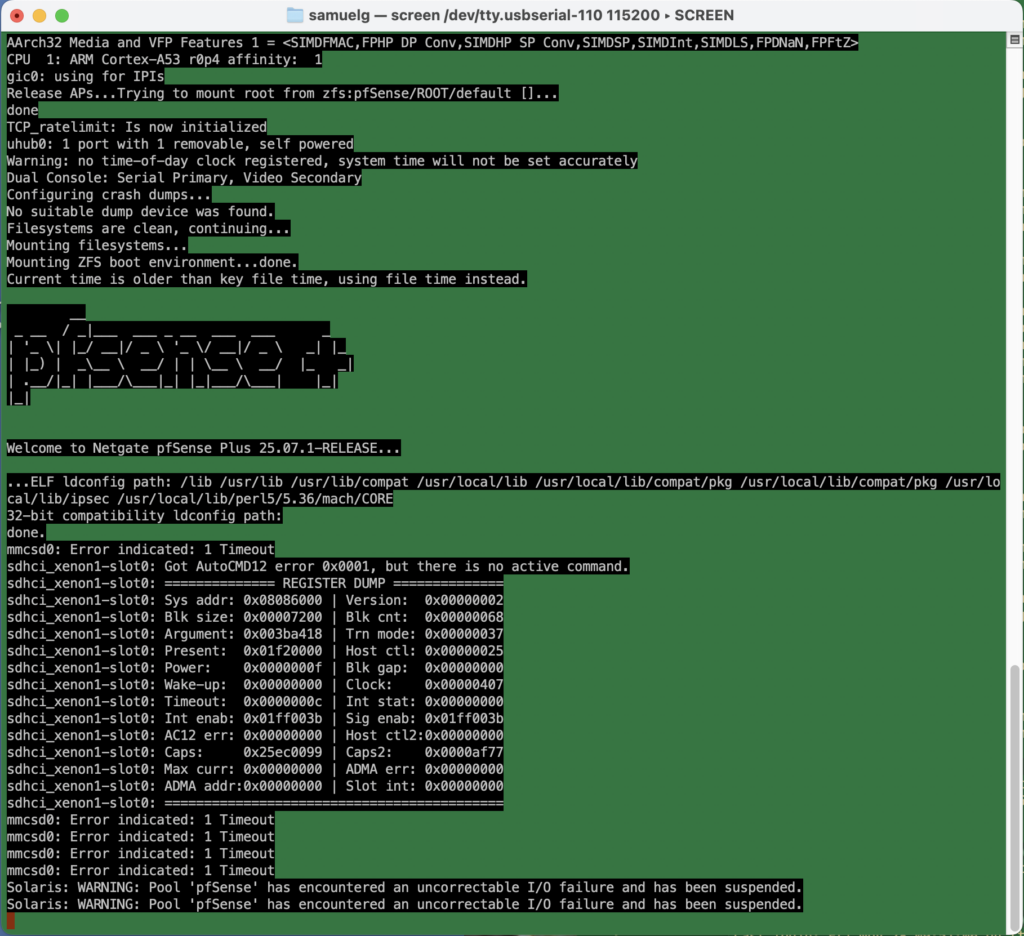

The WP_Filesystem function works by calling get_filesystem_method in wp-admin/includes/file.php, which tests different ways of writing files. Here’s where I got screwed. To see whether it can write directly to the filesystem, this function tries to write a temporary file and, if that succeeds, checks the ownership of the created file. It compares that owner to what it considers the WordPress file owner, which it determines by looking at the ownership of wp-admin/includes/file.php itself. If the temporary file can’t be written or the owners don’t match, it moves on to the next protocol.
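Here is a rough Python approximation of that check, assuming a POSIX system. It is not WordPress’ PHP source: direct_access_ok and choose_method are hypothetical names, and the SSH/FTP fallbacks are reduced to placeholder callables so that only the ordering is shown. The detail that matters is that the direct test compares owners, so successfully creating the temporary file is not enough on its own.

```python
import os
import uuid
from typing import Callable, Optional, Sequence, Tuple


def direct_access_ok(context_dir: str, reference_file: str) -> bool:
    """Approximate the 'direct' test: create a temp file in context_dir and
    compare its owner with the owner of reference_file (file.php in WordPress)."""
    temp_path = os.path.join(context_dir, "temp-write-test-" + uuid.uuid4().hex)
    try:
        with open(temp_path, "w"):
            pass
    except OSError:
        return False  # could not create a file here at all
    try:
        temp_owner = os.stat(temp_path).st_uid
        wp_owner = os.stat(reference_file).st_uid
    except OSError:
        return False
    finally:
        os.remove(temp_path)  # always clean up the probe file
    # Being able to write is not enough: the owners must also match.
    return temp_owner == wp_owner


def choose_method(
    context_dir: str,
    reference_file: str,
    fallbacks: Sequence[Tuple[str, Callable[[], bool]]],
) -> Optional[str]:
    """Return 'direct' if the ownership test passes, otherwise the name of the
    first fallback (placeholders for SSH, FTP, ...) that reports itself usable,
    or None if nothing works."""
    if direct_access_ok(context_dir, reference_file):
        return "direct"
    for name, available in fallbacks:
        if available():
            return name
    return None
```

Run as the web server user against a file.php owned by a different user, direct_access_ok returns False even though the probe file was written, and choose_method falls through to the fallbacks.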

So in my case, get_filesystem_method decided it couldn’t access the filesystem directly, because wp-admin/includes/file.php was not owned by the web server user. So it moved on to try FTP, SSH, etc., and they all failed. It then gracefully threw an error that took down the whole site.

Now, why did this plugin update need write permissions at all? The files making up the plugin had already been installed successfully by my upgrade script. It turns out the plugin had a new stylesheet in an SCSS file, and on the first run it was trying to compile it. I’ll grant that that’s a reasonable case. But the directory where it wanted to put the compiled CSS was writable! It just never got that far, because of the abstraction layer.
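For contrast, here is a hypothetical Python sketch of the simpler behavior one might expect in this case: write the compiled CSS to its actual destination and, if that fails, log it rather than treating it as fatal. write_compiled_css is an illustrative name, not the plugin’s code, and the SCSS compilation step itself is left out.

```python
import contextlib
import logging
import os

log = logging.getLogger("compiled_css")


def write_compiled_css(output_path: str, css: str) -> bool:
    """Write already-compiled CSS straight to its destination.

    The only permission check is the write itself: if the target directory is
    writable by the current user, this succeeds regardless of who owns any
    other file in the installation.
    """
    tmp_path = output_path + ".tmp"
    try:
        with open(tmp_path, "w", encoding="utf-8") as fh:
            fh.write(css)
        # Swap in the finished file so a half-written stylesheet is never served.
        os.replace(tmp_path, output_path)
        return True
    except OSError as exc:
        log.warning("could not write %s: %s", output_path, exc)
        with contextlib.suppress(OSError):
            os.remove(tmp_path)  # drop any partial temp file
        return False
```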

The slightly ridiculous solution to this problem was to change the ownership of wp-admin/includes/file.php to the web server user, load the main page of the website to let it generate the CSS, and then change the ownership of that file back. Stupid, stupid, stupid.
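If this ever has to be repeated, the dance can at least be made to clean up after itself. The following Python sketch (POSIX only, and it must run as root to hand the file to another user) changes a file’s owner for the duration of a with block and restores the original owner afterwards, even if the body raises. temporarily_owned_by is a hypothetical helper; the user name www-data, the install path, and the URL in the commented usage are assumptions.

```python
import os
from contextlib import contextmanager
from typing import Iterator


@contextmanager
def temporarily_owned_by(path: str, uid: int) -> Iterator[None]:
    """Change the owner of path to uid, then restore the original owner on
    exit, even if the body of the with block raises."""
    original_uid = os.stat(path).st_uid
    os.chown(path, uid, -1)  # -1 leaves the group unchanged
    try:
        yield
    finally:
        os.chown(path, original_uid, -1)


# Hypothetical usage (as root); user name, path, and URL are assumptions:
# import pwd, urllib.request
# web_uid = pwd.getpwnam("www-data").pw_uid
# with temporarily_owned_by("/var/www/wordpress/wp-admin/includes/file.php", web_uid):
#     urllib.request.urlopen("https://example.com/").read()  # let the plugin build its CSS
```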